In a previous article I wrote about an experiment where I trained a neural network to play a card game. As a follow-up to that project, I figured it would be fun to see if I could get an LLM to play the game instead. This post is a write-up of how I arrived at a successful LLM-based implementation of the original card game.

Card Game

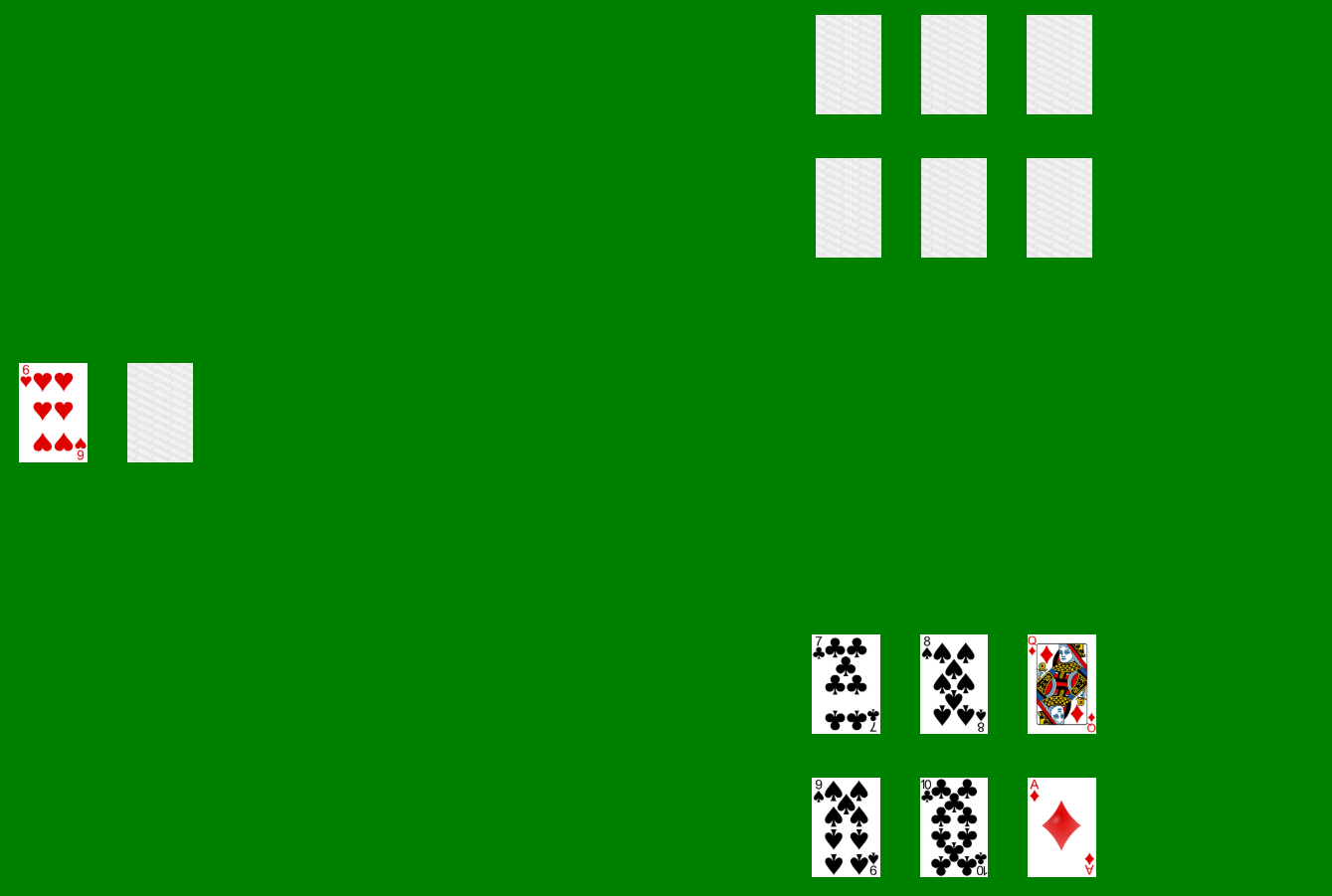

Briefly summarized, the game I am playing is called Palace, a simple but fun game where the objective is to get rid of all your cards by playing cards with a face value equal to or greater than that of the card your opponent just played. Suits don’t matter, but 2s and 10s have special properties that allow them to be played on top of any card. In case you are interested in reading the original article, it can be found here.

One common strategy when playing the game is to preserve your high cards and play the card with the lowest possible face value first. This reduces the risk of not being able to make a play later when your opponent plays a high card. Following this strategy, picking the next card comes down to picking the smallest card whose face value is equal to or greater than that of the card your opponent just played.
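Because this strategy is deterministic, it can be expressed in a few lines of ordinary code, which is handy later as ground truth for checking an LLM’s picks. The sketch below is illustrative rather than code from the game: the function name `smallest_playable` is hypothetical, and it deliberately ignores the special behavior of 2s and 10s.

```python
from typing import Optional, Sequence


def smallest_playable(hand: Sequence[int], current: int) -> Optional[int]:
    """Return the lowest value in `hand` that is >= `current`, or None if no legal play exists.

    Simplification: 2s and 10s are treated as ordinary values here.
    """
    candidates = [value for value in hand if value >= current]
    return min(candidates) if candidates else None


print(smallest_playable([3, 4, 5, 6], 4))  # 4
print(smallest_playable([3, 9, 12], 5))    # 9
print(smallest_playable([3, 4], 11))       # None
```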

In the following section I will discuss how I integrated a local LLM (Llama 3.1 8B) with the game to implement the next-card selection algorithm. Any LLM will do, but I went with Llama 3.1 8B since I needed something that would run on my local desktop and fit on my modest GPU with only 8GB of VRAM.

Picking Cards with LLMs

Since suits don’t matter in this game, we can reduce the problem to picking numbers instead of cards. In my view this simplifies the integration with the LLM, since the LLM doesn’t even need to know that it’s participating in a card game. All the LLM has to do is select the smallest qualifying value from an array of numbers (values 2 to 14) that can be mapped back to cards in the UI (e.g. 13 = King, 14 = Ace).
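A minimal sketch of that mapping, assuming ranks are represented as strings ("2" to "10", "J", "Q", "K", "A") and cards as hypothetical `(rank, suit)` tuples; the suit is simply discarded:

```python
# Hypothetical rank representation: "2".."10", "J", "Q", "K", "A".
RANK_TO_VALUE = {str(n): n for n in range(2, 11)}
RANK_TO_VALUE.update({"J": 11, "Q": 12, "K": 13, "A": 14})
# Reverse lookup used to turn the LLM's numeric pick back into a card rank for the UI.
VALUE_TO_RANK = {value: rank for rank, value in RANK_TO_VALUE.items()}


def hand_to_values(cards):
    """Convert (rank, suit) tuples to the plain numbers the LLM sees; suits are dropped."""
    return [RANK_TO_VALUE[rank] for rank, _suit in cards]


print(hand_to_values([("K", "hearts"), ("4", "spades"), ("A", "clubs")]))  # [13, 4, 14]
print(VALUE_TO_RANK[12])  # Q
```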

First Experiment - Prompting with RAG

My initial thought was that I could implement this with regular prompting and LLM tool calling. Tool calling would enforce a schema on the generated response, which makes it easier to integrate the LLM with the game programmatically. Note: in case you are unfamiliar with tool calling, you might want to check out one of my other articles here.
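For illustration, a tool definition in the JSON-schema style accepted by the `tools` parameter of Ollama’s chat API might look like the following. The tool name `play_card`, its single `value` argument and the validation helper are all assumptions. Note that a schema only guarantees the shape of the answer (an integer), not that the integer is a legal play.

```python
import json

# Hypothetical tool definition; name, description and argument are illustrative.
PLAY_CARD_TOOL = {
    "type": "function",
    "function": {
        "name": "play_card",
        "description": "Play the selected card value.",
        "parameters": {
            "type": "object",
            "properties": {
                "value": {
                    "type": "integer",
                    "description": "Numeric card value between 2 and 14.",
                }
            },
            "required": ["value"],
        },
    },
}


def parse_play_card(tool_call) -> int:
    """Extract the chosen value from a tool call shaped like {'function': {'name': ..., 'arguments': ...}}."""
    function = tool_call["function"]
    if function["name"] != "play_card":
        raise ValueError(f"unexpected tool: {function['name']}")
    arguments = function["arguments"]
    if isinstance(arguments, str):  # some APIs return the arguments as a JSON string
        arguments = json.loads(arguments)
    value = int(arguments["value"])
    if not 2 <= value <= 14:
        raise ValueError(f"value out of range: {value}")
    return value
```

With the Ollama Python client, the definition would be passed as `tools=[PLAY_CARD_TOOL]` to `ollama.chat`, and each entry in the returned message’s `tool_calls` could be handed to `parse_play_card`.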

In the prompt I defined the array (e.g. [3, 4, 5, 6]) and the value of the current “card” (e.g. 4), and asked the LLM to select the smallest value from the array that is equal to or greater than the current value. In addition to the numeric values from the UI, I enriched the prompt behind the scenes with fixed RAG content that told the LLM how to compare the numbers.
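Since the RAG content was fixed, it effectively behaved like static context attached to every request. A minimal sketch of that assembly, with hypothetical rule text and message layout (not the prompt from the original project):

```python
# Hypothetical fixed context; the wording is illustrative, not the original prompt.
COMPARISON_RULES = (
    "You will receive a list of integers and a current value. "
    "Select the smallest integer from the list that is greater than or equal to the current value. "
    "Only ever answer with an integer that appears in the list."
)


def build_messages(values, current):
    """Attach the fixed rules to the per-turn numbers coming from the game UI."""
    return [
        {"role": "system", "content": COMPARISON_RULES},
        {"role": "user", "content": f"List: {list(values)}\nCurrent value: {current}"},
    ]
```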

My initial results were actually pretty good, since the LLM generally picked the correct value and fed it into the tool function. However, I noticed on multiple occasions that the LLM struggled with the numerical comparisons and selected the wrong number from the array. A common scenario was picking a higher value than necessary, which meant playing a high card where you would expect a low one. I also noticed cases where the LLM picked values that were too low, or not even present in the array. The latter category of issues directly interferes with the game experience, since it results in invalid plays from the LLM.
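The failure modes above can be told apart automatically by comparing each pick with the deterministic answer. A hypothetical helper for labeling picks, useful for counting how often each kind of mistake occurs over a batch of turns (the "missed play" label for an empty answer is an added assumption):

```python
from typing import Optional, Sequence


def classify_pick(values: Sequence[int], current: int, pick: Optional[int]) -> str:
    """Label an LLM pick relative to the lowest playable value."""
    candidates = [v for v in values if v >= current]
    expected = min(candidates) if candidates else None
    if pick == expected:
        return "correct"
    if pick is None:
        return "missed play"  # a legal play existed but the model gave none
    if pick not in values:
        return "not in list"  # invalid play
    if pick < current:
        return "too low"  # invalid play
    return "too high"  # legal, but spends a higher card than necessary
```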

To improve the results, I tried some common “prompt engineering” techniques, like few-shot prompting with multiple examples of correct selections. I also tried making the prompt very specific and even added a few self-check instructions.
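Few-shot prompting works by placing solved examples in the conversation before the real question. A sketch under stated assumptions: the chat-message layout and the "List: ... / Current value: ..." text format are hypothetical, and the example answers are computed with the same lowest-playable rule so the demonstrations themselves can’t be wrong.

```python
def few_shot_messages(rules, examples, values, current):
    """Build a chat transcript: system rules, solved examples, then the real question.

    `examples` is a list of (values, current) pairs. Each answer is computed with the
    lowest-playable rule, so the demonstrations are always correct.
    """

    def question(vals, cur):
        return f"List: {list(vals)}\nCurrent value: {cur}"

    messages = [{"role": "system", "content": rules}]
    for ex_values, ex_current in examples:
        answer = min((v for v in ex_values if v >= ex_current), default=None)
        messages.append({"role": "user", "content": question(ex_values, ex_current)})
        messages.append({"role": "assistant", "content": "none" if answer is None else str(answer)})
    messages.append({"role": "user", "content": question(values, current)})
    return messages
```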

While these techniques resulted in noticeable improvements, it was still very challenging to complete a full game without errors, since the LLM would consistently make a few illegal plays during each game.

After multiple iterations I ended up with a very complex prompt in the tool function as seen below. (See my other article for details on how tool functions are called by LLMs and integrated with the top level prompt).

The prompt started out simple but grew in complexity with every iteration. I suspect I could have made further improvements by continuing to work on the prompt, but I was struggling to get it to perform well enough to complete a full game.

Second Experiment - Fine-Tuning the LLM

After struggling with the initial prompt-based implementation for a while, I decided to shift gears and try something different. Instead of relying solely on prompting, I wanted to see if fine-tuning the LLM would be an appropriate solution here. My hope was that fine-tuning would improve the LLM’s ability to compare numbers and eliminate the incorrect selections.

There are a few different ways to fine-tune an LLM. The most extreme version is full fine-tuning, where every weight in the network is retrained, which would be unrealistic for my personal project, even for the 8B version of Llama. A more realistic approach is LoRA or QLoRA, where the original weights stay frozen and only a small set of added low-rank adapter weights is trained. LoRA and QLoRA are similar; the main difference is that QLoRA also quantizes the frozen base model. A key benefit is that quantization reduces the memory footprint of fine-tuning, since the base model’s weights are stored with only 4 bits of precision.
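The memory argument can be made concrete with some back-of-the-envelope arithmetic. LoRA represents the update to a frozen d_out × d_in weight matrix as the product of two small matrices of rank r, so it trains r · (d_out + d_in) parameters instead of d_out · d_in. The numbers below assume Llama 3.1 8B’s hidden size of 4096, a LoRA rank of 16 and a round figure of 8 billion parameters, and they count only the stored weights (no activations, gradients or optimizer state).

```python
def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight: B (d_out x r) plus A (r x d_in)."""
    return rank * (d_out + d_in)


def weight_memory_gb(num_params: float, bits: int) -> float:
    """Approximate storage for the weights alone, in gigabytes (1e9 bytes)."""
    return num_params * bits / 8 / 1e9


# A square 4096 x 4096 projection, such as the attention query projection in Llama 3.1 8B.
full = 4096 * 4096
adapter = lora_params(4096, 4096, rank=16)
print(full, adapter, f"{adapter / full:.2%}")  # 16777216 131072 0.78%

# Rough weight footprint of an ~8B-parameter model at two precisions.
print(weight_memory_gb(8e9, 16))  # 16.0
print(weight_memory_gb(8e9, 4))   # 4.0
```

At 16-bit precision the weights alone would already exceed an 8GB card, while the 4-bit base model used by QLoRA leaves headroom for the adapters and activations.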

Given my limited hardware, I decided to go with QLoRA using the Unsloth framework.
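For reference, a QLoRA setup in Unsloth typically loads a pre-quantized 4-bit base model and then attaches LoRA adapters. The sketch below follows the pattern of Unsloth’s published examples; the checkpoint name and hyperparameters are common defaults from those examples, not necessarily the values used in this project, and the function needs a CUDA GPU with `unsloth` installed to actually run.

```python
# Assumed configuration, modeled on Unsloth's example notebooks.
QLORA_CONFIG = {
    "model_name": "unsloth/Meta-Llama-3.1-8B-bnb-4bit",  # pre-quantized 4-bit base model
    "max_seq_length": 2048,
    "load_in_4bit": True,  # 4-bit frozen base weights: this is what makes it QLoRA
    "lora_rank": 16,
    "lora_alpha": 16,
    "target_modules": ["q_proj", "k_proj", "v_proj", "o_proj",
                       "gate_proj", "up_proj", "down_proj"],
}


def load_qlora_model(config: dict = QLORA_CONFIG):
    """Load the 4-bit base model and attach trainable LoRA adapters."""
    from unsloth import FastLanguageModel  # imported lazily so the config can be inspected without a GPU

    model, tokenizer = FastLanguageModel.from_pretrained(
        model_name=config["model_name"],
        max_seq_length=config["max_seq_length"],
        dtype=None,  # let Unsloth auto-detect float16/bfloat16 for the GPU
        load_in_4bit=config["load_in_4bit"],
    )
    model = FastLanguageModel.get_peft_model(
        model,
        r=config["lora_rank"],
        lora_alpha=config["lora_alpha"],
        lora_dropout=0,
        target_modules=config["target_modules"],
        bias="none",
        use_gradient_checkpointing="unsloth",  # trades extra compute for lower VRAM use
        random_state=3407,
    )
    return model, tokenizer
```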

Fine-tuning data

The first step is coming up with a dataset for fine-tuning. For this project I decided to go with the Alpaca format, which consists of an array of JSON objects with three properties: instruction, input and output.

See the example below as a reference:

The instruction field tells the LLM how to compare the data defined in the input field, and the output field defines the expected result. You can think of this as supervised fine-tuning, since you are training on labeled pairs of inputs and expected outputs.
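Because the correct answer can be computed, a labeled dataset like this can be generated rather than written by hand. A minimal sketch, assuming a hypothetical instruction wording, a "List: [...] / Current value: N" input format and a "none" label when no card qualifies; hands are drawn with replacement since a hand can contain duplicate values:

```python
import json
import random

# Hypothetical instruction text; the real dataset's wording may differ.
INSTRUCTION = (
    "Select the smallest number in the list that is greater than or equal to the "
    "current value. Answer with the number only, or 'none' if no number qualifies."
)


def make_sample(rng: random.Random) -> dict:
    """Create one Alpaca-style record (instruction, input, output) with a computed label."""
    values = rng.choices(range(2, 15), k=rng.randint(1, 6))  # random hand, duplicates allowed
    current = rng.randint(2, 14)
    candidates = [v for v in values if v >= current]
    return {
        "instruction": INSTRUCTION,
        "input": f"List: {values}\nCurrent value: {current}",
        "output": str(min(candidates)) if candidates else "none",
    }


def make_dataset(n: int = 300, seed: int = 0) -> list:
    rng = random.Random(seed)  # fixed seed so the dataset is reproducible
    return [make_sample(rng) for _ in range(n)]


def save_dataset(path: str, n: int = 300, seed: int = 0) -> None:
    with open(path, "w", encoding="utf-8") as f:
        json.dump(make_dataset(n, seed), f, indent=2)
```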

Below is the full fine-tuning script:

The script above is based on the code from this excellent article from Hugging Face. I had to make a few tweaks to meet my own requirements, but conceptually the implementation is still very similar.

My local desktop is pretty modest in terms of resources (8GB of VRAM), but fine-tuning on this dataset is still pretty fast (roughly 10 minutes end-to-end). I had to experiment with the size of the dataset, but in the end I settled on 300 samples.

Once the fine-tuning is done, how do we use the new model in the application?

The output of the fine-tuning process is a GGUF file that can be imported into Ollama by running the following command against the Docker-hosted Ollama process:
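As a hedged sketch of this export-and-import step (not necessarily the exact command used here): Unsloth can write a GGUF file via `save_pretrained_gguf`, and Ollama builds a model from a Modelfile whose `FROM` line points at that file. The output directory, quantization method, container name `ollama` and paths are assumptions; the Modelfile path must be visible inside the container, for example through a mounted volume.

```python
from typing import List


def export_gguf(model, tokenizer, out_dir: str = "gguf_model") -> None:
    """Write the fine-tuned Unsloth model to `out_dir` as a q4_k_m-quantized GGUF file."""
    model.save_pretrained_gguf(out_dir, tokenizer, quantization_method="q4_k_m")


def modelfile_text(gguf_path: str) -> str:
    """Minimal Ollama Modelfile: build the model from a local GGUF file."""
    return f"FROM {gguf_path}\n"


def ollama_create_command(model_name: str, modelfile_path: str, container: str = "ollama") -> List[str]:
    """Argument list for running `ollama create` inside the Docker container that hosts Ollama."""
    return ["docker", "exec", container, "ollama", "create", model_name, "-f", modelfile_path]
```

Running it could then look like `subprocess.run(ollama_create_command("custom_llama_model", "/models/Modelfile"), check=True)`.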

Once the model has been created in Ollama, it can be loaded just like any other Ollama-supported LLM. See the code listing below as a reference for how to load the new model, called custom_llama_model.
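A sketch of such a call with the official `ollama` Python package, plus a defensive parser for the reply. Passing `raw=True` is a design choice assumed here: it sends the fully formatted Alpaca prompt without applying Ollama’s prompt template, so the model sees the same layout it was trained on. The option values and the parsing rule are also assumptions.

```python
import re
from typing import Optional, Sequence

MODEL_NAME = "custom_llama_model"


def ask_model(prompt: str) -> str:
    """Send a pre-formatted prompt to the fine-tuned model served by Ollama."""
    import ollama  # requires the `ollama` package and a running Ollama server

    response = ollama.generate(
        model=MODEL_NAME,
        prompt=prompt,
        raw=True,  # use the prompt as-is, without Ollama's template
        options={"temperature": 0, "num_predict": 8},  # greedy decoding, short reply
    )
    return response["response"]


def parse_pick(text: str, values: Sequence[int]) -> Optional[int]:
    """Turn the model's reply into a value, rejecting anything that is not in the hand."""
    match = re.search(r"\d+", text)
    if match is None:
        return None
    pick = int(match.group())
    return pick if pick in values else None
```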

The prompt I use when generating responses with the final fine-tuned model is identical to the Alpaca prompt used during fine-tuning, as seen below:
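As an illustration of keeping the two prompts identical, the sketch below builds both the training text and the inference prompt from one template. It assumes the standard Alpaca template used in Unsloth’s Alpaca examples and a hypothetical "List: ... / Current value: ..." input format; the only differences at inference time are the empty response section and the missing EOS token.

```python
# Standard Alpaca template (assumed; the project's exact prompt may differ).
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that provides "
    "further context. Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{}\n\n### Input:\n{}\n\n### Response:\n{}"
)


def training_text(record: dict, eos_token: str) -> str:
    """Training example: full prompt plus expected output and EOS, so the model learns to stop."""
    return ALPACA_TEMPLATE.format(record["instruction"], record["input"], record["output"]) + eos_token


def inference_prompt(instruction: str, values, current: int) -> str:
    """Inference prompt: identical layout, with the response left empty for the model to fill in."""
    return ALPACA_TEMPLATE.format(instruction, f"List: {list(values)}\nCurrent value: {current}", "")
```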

For more information on how to run Ollama and Llama locally, please check out my other article here:

Conclusion

After fine-tuning the model, I started seeing really good results and was able to complete multiple games back-to-back without any “cheating” from the LLM. I have also added a pretty comprehensive test suite of play scenarios to further build confidence in the accuracy of the fine-tuned model.
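A test suite of this kind can be as simple as a table of scenarios with known answers, run against any picking function. A minimal sketch with hypothetical scenarios and function names; a real suite would cover many more hands, including edge cases like duplicate values and hands with no legal play:

```python
from typing import Callable, Optional, Sequence

# Hypothetical play scenarios: (hand values, current value, expected pick).
SCENARIOS = [
    ([3, 4, 5, 6], 4, 4),
    ([3, 9, 12], 5, 9),
    ([14, 13, 13], 13, 13),
    ([2, 3], 11, None),  # no legal play
]


def run_scenarios(pick_fn: Callable[[Sequence[int], int], Optional[int]], scenarios=SCENARIOS):
    """Return every scenario where pick_fn disagrees with the expected pick."""
    failures = []
    for values, current, expected in scenarios:
        actual = pick_fn(values, current)
        if actual != expected:
            failures.append((values, current, expected, actual))
    return failures
```

With pytest, `assert run_scenarios(model_pick) == []` would fail with the list of mismatched scenarios, where `model_pick` is a hypothetical wrapper around the fine-tuned model.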

My theory for why fine-tuning works in this scenario is that LLMs generally have a hard time comparing numeric values reliably, but fine-tuning on a numeric dataset for a very specific use case can greatly improve their accuracy.

Screenshot of the game below:

Helpful References: