In this post, I share a simple demo project in which I integrate multiple external agents into a chatbot over A2A.

The main objective of this experiment is to explore ways to enrich a standard chatbot with new capabilities by integrating external agents over A2A (Agent2Agent). Specifically, I want to add news generation and currency conversion to the chatbot by bringing in two agents that provide those capabilities.

If you are new to the concept of A2A, you may want to check out one of my previous articles for a quick primer.

Agent Integration

How does the chatbot know which agents are available?

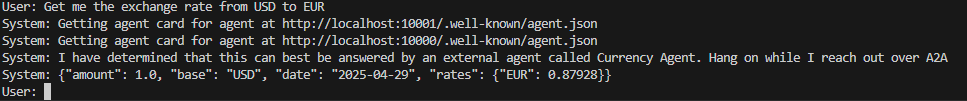

In my current implementation, I start by downloading the agent cards to discover the capabilities of each agent. In this sample, the agent cards are fetched from A2A servers running on http://localhost:10001 and http://localhost:10000.
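To make the discovery step concrete, here is a minimal sketch of what fetching and summarizing an agent card could look like without the A2A helper classes. It assumes each server publishes its card at `/.well-known/agent.json`, the default path used by `A2ACardResolver` in the early A2A samples (other spec versions may use a different path). The function names are hypothetical, and only the `name`, `description`, and `url` fields that the chatbot later hands to the LLM are kept.

```python
import json
from urllib.request import urlopen

# Assumption: default well-known path used by the early A2A sample card resolver.
AGENT_CARD_PATH = "/.well-known/agent.json"


def agent_card_url(base_url: str) -> str:
    """Build the URL where an A2A server is assumed to publish its agent card."""
    return base_url.rstrip("/") + AGENT_CARD_PATH


def fetch_agent_card(base_url: str, timeout: float = 5.0) -> dict:
    """Download and decode the agent card JSON (requires a running A2A server)."""
    with urlopen(agent_card_url(base_url), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def summarize_card(card: dict) -> dict:
    """Keep only the fields the delegating LLM needs to choose an agent."""
    return {
        "name": card["name"],
        "description": card.get("description", ""),
        "url": card["url"],
    }
```

In the demo, the equivalent of `fetch_agent_card` is `A2ACardResolver(url).get_agent_card()`, which returns a typed `AgentCard` object rather than a plain dictionary.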

Next, I feed the chat prompt, together with the agent card descriptions, into a local LLM (Qwen 3) and ask it to pick an appropriate agent through tool calling. If a matching agent is found, the request is sent to that external agent using the proposed A2A protocol. If none of the external agents match, the chatbot falls back to a local LLM (Llama 3.2) for standard chat.
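How well the selection works depends largely on how the agent descriptions are presented to the LLM. The sketch below shows one possible way to render the agent summaries as a plain-text list (instead of a raw Python list of dictionaries) and to list the `root_agent` fallback as an explicit choice. The function name and the prompt wording are illustrative assumptions, not the prompt used in the demo.

```python
def build_delegation_prompt(request: str, agent_summary: list[dict]) -> str:
    """Render a delegation prompt that lists each agent's name and description."""
    # One line per remote agent, e.g. "- Example Agent: Converts currencies".
    agent_lines = [f"- {agent['name']}: {agent['description']}" for agent in agent_summary]
    # The fallback is listed explicitly so the model always has a valid name to pick.
    agent_lines.append("- root_agent: general conversation handled by the local LLM")
    return (
        "You are an expert delegator that routes a user request to the most "
        "appropriate agent.\n"
        f"User request: {request}\n"
        "Call the select_current_agent tool with exactly one of these agent names:\n"
        + "\n".join(agent_lines)
    )
```

Listing the fallback alongside the real agents gives the model a concrete name to return when nothing fits, rather than relying on a separate instruction at the end of the prompt.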

I have included the code for selecting the agent below. The select_agent function returns a client that can be used to interact with the underlying agent. For remote agents, the client is an instance of A2AClient. You may recall A2AClient as one of the classes from the original A2A repo provided by Google. To access the A2A classes directly, I added Google's original repo as a Git submodule to my demo repo.

A2A only applies to external agents, so when no agent matches the prompt, a custom root client (RootClient) is returned instead to handle communication with the Llama 3.2 instance.

```python
from datetime import datetime

from A2A.samples.python.common.client.card_resolver import A2ACardResolver
from A2A.samples.python.common.client.client import A2AClient

# Helpers defined elsewhere in the project (not shown):
#   init_llm() returns the local Llama 3.2 chat model,
#   init_llm_with_tool_calling() returns the Qwen 3 model with the select_current_agent tool bound,
#   tools_names maps each tool name to its LangChain tool object.

# A2A servers to discover agents from
urls = [
    "http://localhost:10001",
    "http://localhost:10000",
]


class AgentContext:
    """Singleton that downloads the agent cards once and keeps one A2AClient per agent."""

    _instance = None
    _initialized = False

    def __new__(cls, *args, **kwargs):
        if cls._instance is None:
            cls._instance = super().__new__(cls)
        return cls._instance

    def __init__(self):
        if not self._initialized:
            self.clients = {}
            self.agent_summary = []
            for url in urls:
                # Fetch the agent card to learn the agent's name, description and endpoint
                resolver = A2ACardResolver(url)
                card = resolver.get_agent_card()
                self.clients[card.name] = A2AClient(card)
                self.agent_summary.append({"name": card.name, "description": card.description, "url": card.url})
            AgentContext._initialized = True


class RootClient:
    """Fallback client: sends the prompt to the local LLM and mimics an A2A task response."""

    async def send_task(self, payload):
        llm = init_llm()
        query = payload["message"]["parts"][-1]["text"]
        response = llm.invoke(query)
        return {
            "result": {
                "id": payload["sessionId"],
                "status": {"state": "completed", "timestamp": datetime.now().timestamp()},
                "artifacts": [{"parts": [{"text": response.content}]}],
            }
        }


class RootAgent:
    def __init__(self):
        self.model = init_llm_with_tool_calling()

    def select_agent(self, request: str):
        agent_context = AgentContext()
        prompt = f"""
You are an expert delegator that can delegate the user request to the appropriate remote agent. The request from the user is: {request}.
Make sure to use the tool called select_current_agent to select a single agent, based on the agent descriptions, that will best serve the request.
Here are the descriptions of the agents to choose from: {agent_context.agent_summary}.
If you are unable to pick an appropriate agent based on the agent descriptions, select the option "root_agent".
"""
        result = self.model.invoke(prompt)

        # Fall back to the local LLM if the model did not produce a tool call
        if not result.tool_calls:
            return RootClient()

        tool_call = result.tool_calls[0]
        agent_name = tool_call["args"]["current_agent"]

        if agent_name == "root_agent":
            client = RootClient()
        else:
            print(f"System: I have determined that this can best be answered by an external agent called {agent_name}. Hang on while I reach out over A2A")
            # The tool maps the agent name to the matching A2AClient
            client = tools_names[tool_call["name"]].invoke(tool_call["args"])

        return client
```
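Because select_agent returns either an A2AClient or a RootClient, the chat loop only relies on both objects exposing an async `send_task(payload)` method. The sketch below spells out that implicit contract with `typing.Protocol`. `TaskClient`, `EchoClient`, and `ask` are hypothetical names: `EchoClient` is a stand-in for RootClient that echoes the prompt instead of calling an LLM. It covers only the dictionary-shaped response; a real A2AClient returns a `SendTaskResponse` model instead.

```python
import asyncio
from datetime import datetime
from typing import Any, Protocol


class TaskClient(Protocol):
    """Shape shared by the remote A2A client and the local root client.

    Protocols are checked by static type checkers such as mypy, not at runtime.
    """

    async def send_task(self, payload: dict[str, Any]) -> Any: ...


class EchoClient:
    """Hypothetical stand-in for RootClient that echoes the last text part."""

    async def send_task(self, payload: dict[str, Any]) -> dict[str, Any]:
        text = payload["message"]["parts"][-1]["text"]
        # Same response layout as RootClient, so callers can treat both alike.
        return {
            "result": {
                "id": payload["sessionId"],
                "status": {"state": "completed", "timestamp": datetime.now().timestamp()},
                "artifacts": [{"parts": [{"text": f"echo: {text}"}]}],
            }
        }


async def ask(client: TaskClient, text: str) -> str:
    """Send one prompt through any TaskClient and return the first text part."""
    payload = {
        "id": "task-1",
        "sessionId": "session-1",
        "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
    }
    result = await client.send_task(payload)
    return result["result"]["artifacts"][0]["parts"][0]["text"]


if __name__ == "__main__":
    print(asyncio.run(ask(EchoClient(), "hello")))  # echo: hello
```

Keeping the fallback behind the same method name means the chat loop never needs to know whether it is talking to a remote agent or the local model.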

Once the LLM has selected an agent based on the prompt and the agent descriptions, it generates a tool call. Inside the tool function, I map the agent name to the corresponding client, as seen below.

```python
from pydantic import Field
from langchain_core.tools import tool

from agent.agent_context import AgentContext


@tool
def select_current_agent(current_agent: str = Field(description="The most appropriate agent to use for the assigned task")):
    """Selects the best agent for the task at hand"""
    # Look up the A2AClient created from the matching agent card
    agent_context = AgentContext()
    a2a_client = agent_context.clients[current_agent]
    return a2a_client
```
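The code above assumes that the LLM always returns exactly one tool call containing a known agent name. Smaller local models do not always follow tool-calling instructions, so a more defensive variant could fall back to the root client whenever no tool call is produced or the name does not match any loaded agent card. The sketch below uses plain dictionaries shaped like LangChain's `tool_calls` entries (`name` and `args` keys). The `resolve_client` helper is hypothetical and not part of the demo.

```python
from typing import Any


def resolve_client(tool_calls: list[dict[str, Any]], clients: dict[str, Any], fallback: Any) -> Any:
    """Map the first select_current_agent call to a client, else return the fallback."""
    for call in tool_calls:
        # Ignore any other tools the model might have called
        if call.get("name") != "select_current_agent":
            continue
        agent_name = call.get("args", {}).get("current_agent")
        # "root_agent" is not a key in `clients`, so it falls back like any unknown name
        if isinstance(agent_name, str):
            return clients.get(agent_name, fallback)
        return fallback
    # No usable tool call at all: fall back to the local LLM
    return fallback
```

With this helper, a hallucinated agent name leads to a normal chat answer instead of a `KeyError` inside the tool.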

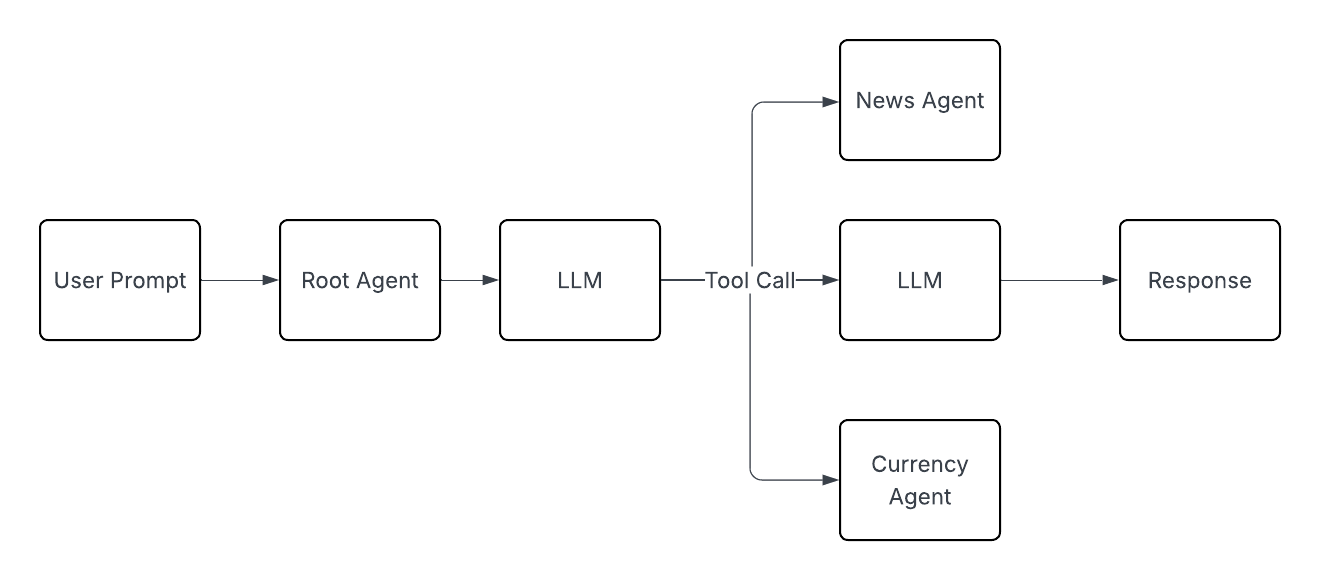

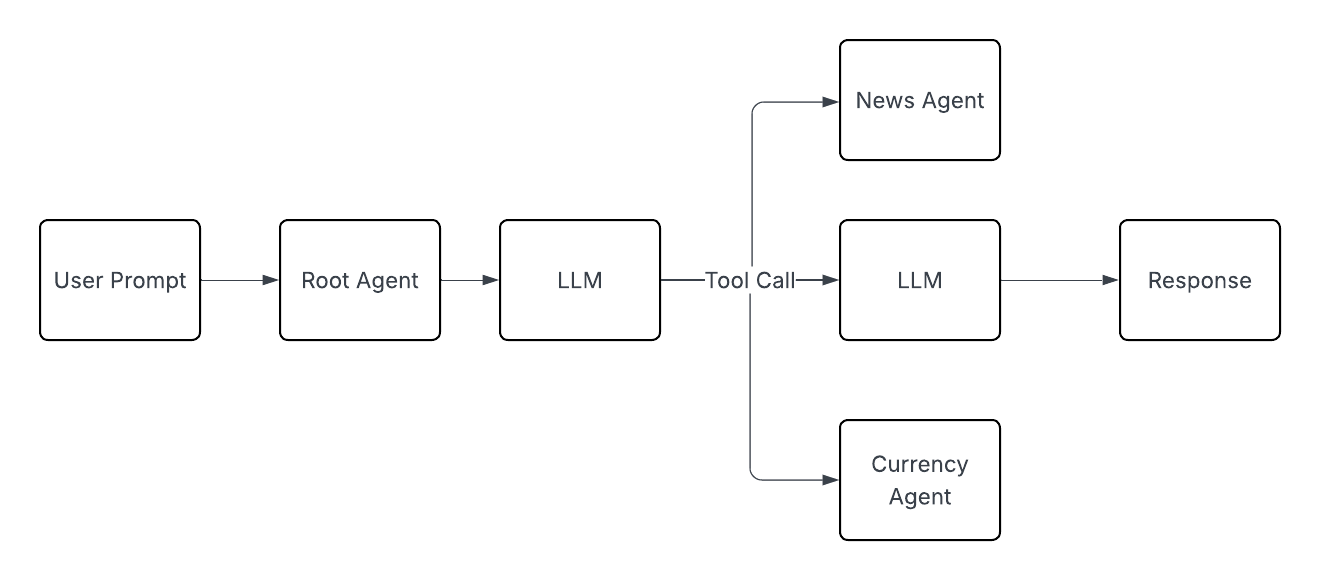

I have also included a simple flow diagram below that shows how an agent is selected.

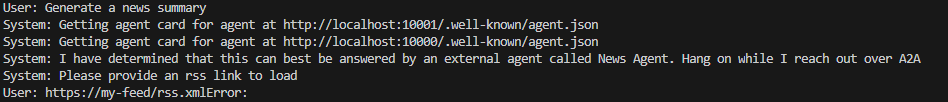

The code for the chatbot loop is included below. The key step is the call to select_agent, where I pass in the user prompt and try to match it with the capabilities of an external agent. It's also worth noting that the task returned by an agent can carry an "input-required" status. I use this in the news agent to request an RSS link from the user.

```python
import asyncio
import json
from uuid import uuid4

# Import from the same package path as A2AClient so the isinstance check below matches
from A2A.samples.python.common.types import SendTaskResponse, TaskState

# RootAgent is the class shown earlier


async def completeTask(client, prompt, taskId, sessionId, resume):
    payload = {
        "id": taskId,
        "sessionId": sessionId,
        "acceptedOutputModes": ["text"],
        "message": {
            "role": "user",
            "parts": [{"type": "text", "text": prompt}],
        },
        "resume": resume,  # custom flag (not a standard A2A task parameter)
    }

    taskResult = await client.send_task(payload)

    if isinstance(taskResult, SendTaskResponse):
        # Response from a remote agent via A2AClient: convert the pydantic model to a dict
        result = json.loads(taskResult.model_dump_json(exclude_none=True))
        state = TaskState(taskResult.result.status.state)
        if state == TaskState.INPUT_REQUIRED:
            # The agent needs more information (e.g. an RSS link): ask the user and continue the same task
            for p in result["result"]["status"]["message"]["parts"]:
                print(f"System: {p['text']}")
            prompt = input("User: ")
            return await completeTask(client=client, prompt=prompt, taskId=taskId, sessionId=sessionId, resume=True)
    else:
        # RootClient already returns a plain dict
        result = taskResult

    if result["result"]["status"]["state"] == "completed":
        for a in result["result"]["artifacts"]:
            for p in a["parts"]:
                print(f"System: {p['text']}")

    return True


async def chat_loop():
    continue_loop = True
    root_agent = RootAgent()

    while continue_loop:
        try:
            task_id = uuid4().hex
            session_id = uuid4().hex
            prompt = input("User: ")
            if prompt in (":q", "quit"):
                return False
            # Route the prompt to a remote agent or to the local LLM
            client = root_agent.select_agent(prompt)
            continue_loop = await completeTask(client=client, prompt=prompt, resume=False, taskId=task_id, sessionId=session_id)
        except Exception as e:
            print(f"Error: {e}")
            break


if __name__ == "__main__":
    asyncio.run(chat_loop())
```
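The recursive call in completeTask works well for a demo, but each follow-up question from an agent adds another stack frame. Below is a sketch of an iterative variant of the same input-required handling. It operates on plain dictionaries shaped like the responses above (a `SendTaskResponse` would first need to be dumped to a dict) and reuses the same task id so the agent can continue the task. `build_payload`, `complete_task`, the `ask_user` callback, and `FakeNewsAgent` are hypothetical names, chosen so the example runs without any A2A servers.

```python
import asyncio
from typing import Any, Awaitable, Callable

SendTask = Callable[[dict[str, Any]], Awaitable[dict[str, Any]]]


def build_payload(task_id: str, session_id: str, text: str) -> dict[str, Any]:
    """Same payload layout as completeTask, minus the custom "resume" flag."""
    return {
        "id": task_id,
        "sessionId": session_id,
        "acceptedOutputModes": ["text"],
        "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
    }


async def complete_task(send_task: SendTask, prompt: str, task_id: str, session_id: str,
                        ask_user: Callable[[str], str]) -> list[str]:
    """Resend the same task until it leaves the "input-required" state."""
    outputs: list[str] = []
    while True:
        result = (await send_task(build_payload(task_id, session_id, prompt)))["result"]
        state = result["status"]["state"]
        if state == "input-required":
            question = " ".join(p["text"] for p in result["status"]["message"]["parts"])
            prompt = ask_user(question)  # input() in an interactive chatbot
            continue
        if state == "completed":
            for artifact in result.get("artifacts", []):
                outputs.extend(part["text"] for part in artifact["parts"])
        # Any other state (e.g. failed) ends the loop with whatever was collected
        return outputs


class FakeNewsAgent:
    """Hypothetical agent that asks for an RSS URL once, then completes."""

    def __init__(self) -> None:
        self.calls = 0

    async def send_task(self, payload: dict[str, Any]) -> dict[str, Any]:
        self.calls += 1
        if self.calls == 1:
            status = {"state": "input-required",
                      "message": {"role": "agent", "parts": [{"type": "text", "text": "Which RSS feed?"}]}}
            return {"result": {"id": payload["id"], "status": status}}
        text = payload["message"]["parts"][-1]["text"]
        return {"result": {"id": payload["id"], "status": {"state": "completed"},
                           "artifacts": [{"parts": [{"type": "text", "text": f"Summary of {text}"}]}]}}


if __name__ == "__main__":
    agent = FakeNewsAgent()
    print(asyncio.run(complete_task(agent.send_task, "Give me the news", "t1", "s1",
                                    ask_user=lambda q: "https://example.com/feed.xml")))
    # ['Summary of https://example.com/feed.xml']
```

Passing `ask_user` in as a callback also keeps the loop testable, because a scripted answer can replace `input()`.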

Demo

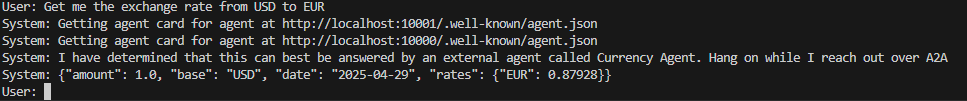

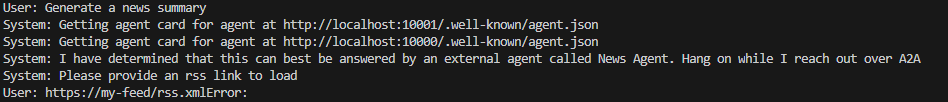

As the screenshots show, the chatbot successfully maps prompts to the appropriate external agents. In the case of the news agent, there is also a back-and-forth between the user and the agent, where the agent asks the user for an RSS feed URL.

In the fallback case, the chatbot simply defaults to regular LLM interaction.

Code

The full project code is available on GitHub. Note that the project includes Google's original A2A repo as a Git submodule, which gives it access to the A2A code provided by Google.